In this project, we aim to gain a deeper understanding of adversarial losses by decoupling the effects of their component functions and regularization terms. Specifically, we address the following two research questions:

- What types of component functions yield theoretically valid adversarial loss functions?

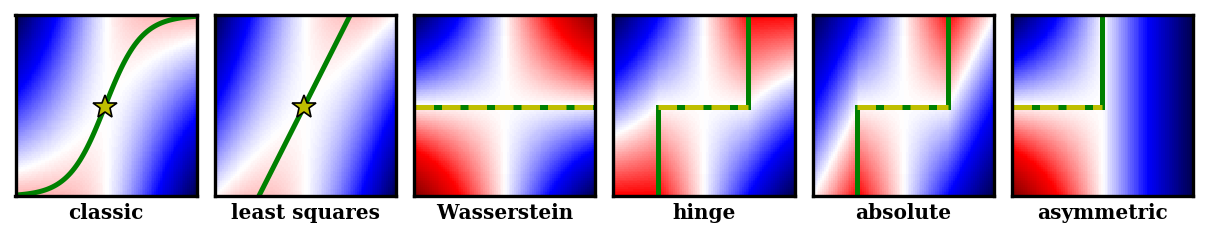

- How do different combinations of the component functions and the regularization approaches perform empirically against one another?

For the first question, we derive necessary and sufficient conditions on the component functions under which the adversarial loss is a divergence-like measure between the data and the model distributions. For more details, please refer to our paper (warning: lots of math!).
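To make the term "component functions" more concrete, here is a minimal sketch, assuming that a discriminator objective can be written as a real-sample term `f` plus a generated-sample term `g`, both applied to raw discriminator scores. The names `f`, `g`, `COMPONENTS`, and `discriminator_objective` are illustrative assumptions and are not taken from the paper. The two entries are the widely used classic (log-sigmoid) and hinge losses, and regularization terms are left out on purpose.

```python
import math


def softplus(y):
    # Numerically stable log(1 + exp(y)).
    return max(y, 0.0) + math.log1p(math.exp(-abs(y)))


# Hypothetical registry: each loss is a pair (f, g) of component functions.
# f is applied to scores of real samples, g to scores of generated samples.
COMPONENTS = {
    # Classic GAN: log(sigmoid(y)) and log(1 - sigmoid(y)).
    "classic": (lambda y: -softplus(-y), lambda y: -softplus(y)),
    # Hinge loss: min(0, y - 1) and min(0, -y - 1).
    "hinge": (lambda y: min(0.0, y - 1.0), lambda y: min(0.0, -y - 1.0)),
}


def discriminator_objective(name, real_scores, fake_scores):
    """Sample estimate of E[f(D(x))] + E[g(D(x_fake))], to be maximized."""
    f, g = COMPONENTS[name]
    real_term = sum(f(y) for y in real_scores) / len(real_scores)
    fake_term = sum(g(y) for y in fake_scores) / len(fake_scores)
    return real_term + fake_term
```

Swapping the `(f, g)` pair while keeping everything else fixed is one way to isolate the effect of the component functions from that of any regularization term added on top.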

For the second question, we propose a new, simple framework called DANTest for comparing different adversarial losses. With DANTest, we can decouple the effects of the component functions from those of the regularization approaches. In other words, we would like to know which of the two makes one adversarial loss better than another. Learn more about the DANTest.